Translate a SQL Query to Pandas DataFrame Code: A Practical Step‑by‑Step Guide

Ever stared at a SQL query and wished you could just drop it into a Jupyter notebook and get a tidy DataFrame? Yeah, I’ve been there. Typing SELECT statements feels comfortable, but switching to pandas often feels like learning a new language on the fly. That’s why it pays to learn how to translate a SQL query into pandas DataFrame code.

You probably know the pain of rewriting every WHERE clause, GROUP BY, and JOIN as a series of .loc filters, merge calls, and agg dictionaries. It’s easy to get lost in syntax and end up with a dataframe that looks nothing like the table you started with. The good news? You don’t have to reinvent the wheel every time.

Imagine you have a sales table and you need total revenue per region for the last quarter. In SQL you’d write something like SELECT region, SUM(revenue) FROM sales WHERE date >= '2023-10-01' GROUP BY region. With pandas, the equivalent takes a few lines: read_csv, filter the date column, then groupby('region')['revenue'].sum(). It’s straightforward once you see the pattern, and you’ll start spotting it everywhere.
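Here is a minimal sketch of that translation. To keep it self-contained, it builds a tiny in-memory sales table instead of calling read_csv; the column names (region, date, revenue) and the values are illustrative assumptions.

```python
import pandas as pd

# Hypothetical stand-in for pd.read_csv("sales.csv")
sales = pd.DataFrame({
    "region": ["North", "South", "North", "West", "South"],
    "date": pd.to_datetime(
        ["2023-09-28", "2023-10-02", "2023-11-15", "2023-12-01", "2023-12-20"]
    ),
    "revenue": [100.0, 250.0, 300.0, 80.0, 50.0],
})

# WHERE date >= '2023-10-01'  ->  boolean mask on the date column
recent = sales[sales["date"] >= "2023-10-01"]

# GROUP BY region + SUM(revenue)  ->  groupby().sum()
revenue_by_region = recent.groupby("region")["revenue"].sum()
print(revenue_by_region)
```

The first North row falls before the cutoff, so it never reaches the groupby, exactly as the WHERE clause would drop it.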

But spotting the pattern is only half the battle. You also need to handle data‑type quirks, missing values, and the occasional nested sub‑query that doesn’t map cleanly to a single pandas operation. That’s where a smart assistant can save you minutes—or hours. SwapCode’s Code Explainer tool can take your SQL snippet and walk you through the pandas translation step by step, highlighting the exact functions you need.

So, what’s the first move? Grab a simple SELECT statement you already use, paste it into the explainer, and watch how it breaks the query into pandas commands. You’ll see the DataFrame creation, the boolean mask for filters, and the groupby‑agg chain all laid out. It feels like having a teammate who just gets the syntax you’re after.

Once you’ve got the basics down, start layering complexity—add joins, window functions, or CTEs—and let the tool suggest the pandas equivalents. You’ll build a mental map that lets you translate on the fly, without opening a new tab every time. Trust me, after a few tries you’ll be converting on instinct, and your notebooks will stay clean and readable.

Ready to turn those SQL headaches into pandas power moves? Let’s dive in and turn that query into code you can run, tweak, and share with your team.

TL;DR

If you’ve ever stared at a SQL query and wished it could instantly become clean pandas code, this guide shows you exactly how to translate a SQL query into pandas DataFrame code without manual rewrites.

We’ll walk through examples, flag pitfalls, and hand you a quick workflow that saves time daily.

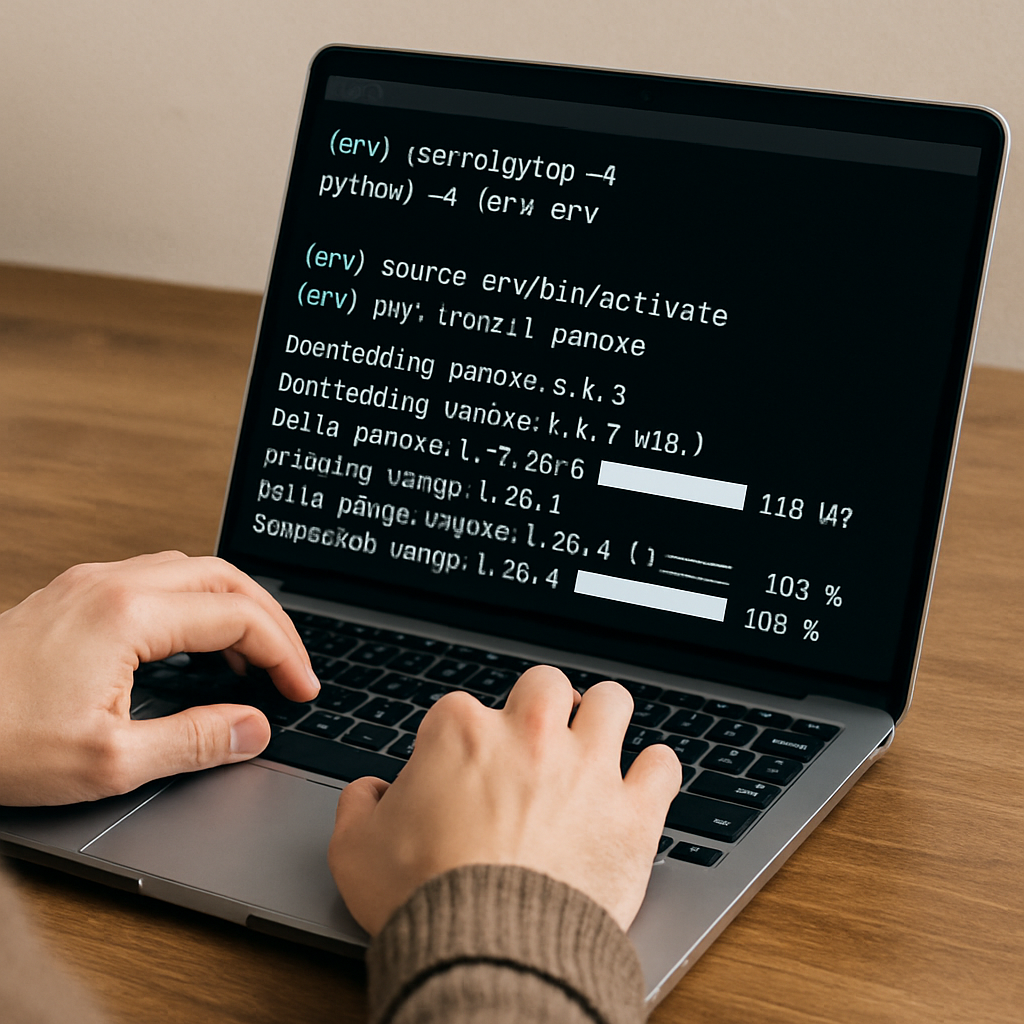

Step 1: Set Up Your Python Environment

Alright, before we start turning SQL into pandas, we need a tidy playground. Think of your Python environment as the kitchen where you’ll be chopping, sautéing, and plating data. If the kitchen’s a mess, the dish never turns out right.

First things first: install a recent Python 3 release (the conda example below uses 3.11). Recent pandas releases have dropped support for older interpreters such as 3.8, so an outdated Python tends to surface as confusing install or import errors.

Got Python? Great. Now create an isolated space so your project’s packages don’t clash with anything else on your machine. Open a terminal and run:

python -m venv env

source env/bin/activate # macOS/Linux

.\env\Scripts\activate # Windows

That little env folder is your sandbox. Whenever you see the (env) prefix in your prompt, you know the environment is active.

Next, fire up pip and pull in the essentials:

pip install pandas sqlalchemy jupyterlab

pandas is the star, sqlalchemy lets you talk to databases without writing raw connectors, and jupyterlab gives you an interactive notebook where you can experiment line‑by‑line.

Do you prefer conda? No problem. The equivalent steps are:

conda create -n sql2pandas python=3.11 pandas sqlalchemy jupyterlab

conda activate sql2pandas

Choose whichever tool feels natural; the end result is the same isolated environment.

Verify the setup

Run a quick sanity check. In the activated terminal, type:

python -c "import pandas, sqlalchemy; print(pandas.__version__, sqlalchemy.__version__)"

If you see version numbers instead of an error, you’re good to go.

Now spin up a notebook:

jupyter lab

When the browser opens, create a new Python notebook and run import pandas as pd. If typing pd. and pressing Tab shows autocomplete suggestions, you’ve officially set the stage.

But what if you’re staring at a complex query and wish you had instant translation? That’s where SwapCode’s Code Explainer tool shines. Paste your SQL snippet, and it will spit out the pandas equivalent, saving you from the guesswork.

Tip: Keep a requirements file

As you add more libraries—maybe numpy, matplotlib, or scikit‑learn—record them in a requirements.txt:

pip freeze > requirements.txt

This way anyone on your team can replicate the exact environment with a single pip install -r requirements.txt. Consistency is king when you start sharing notebooks across the org.

And finally, a quick habit: after you finish a session, deactivate the virtual environment with deactivate. It keeps your global shell clean and reminds you to reactivate next time.

Pick an editor you love. VS Code, PyCharm, or even the classic JupyterLab interface all play nicely with pandas. In VS Code, install the Python extension and enable IntelliSense – it will auto‑suggest DataFrame methods as you type, which feels like having a seasoned teammate whispering the right attribute names.

Inside JupyterLab you can also try the ydata-profiling package (formerly pandas-profiling). A single ProfileReport(df) call produces a full data‑profile report, with missing‑value summaries, distributions, and correlations, so you can quickly see whether the DataFrame you just built matches the shape of the original SQL table.

A quick sanity test is to load a tiny CSV that mimics your table schema, run the pandas translation, and compare row counts. If the numbers line up, you’ve likely captured the WHERE and GROUP BY logic correctly.
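A minimal sketch of that row-count check follows. It assumes an in-memory sqlite3 database as the stand-in for your real server (so nothing beyond pandas needs installing), and the table name sales and its columns are hypothetical. The same rows go through the original SQL and through the pandas translation, and the outputs are compared.

```python
import sqlite3
import pandas as pd

# Tiny hypothetical table that mimics the real schema
df = pd.DataFrame({
    "region": ["North", "South", "North", "West"],
    "status": ["active", "active", "closed", "active"],
    "revenue": [100.0, 250.0, 300.0, 80.0],
})

# Run the original SQL against an in-memory SQLite copy of the same rows
conn = sqlite3.connect(":memory:")
df.to_sql("sales", conn, index=False)
sql_result = pd.read_sql(
    "SELECT region, SUM(revenue) AS revenue FROM sales "
    "WHERE status = 'active' GROUP BY region",
    conn,
)
conn.close()

# The pandas translation under test
pandas_result = (
    df[df["status"] == "active"]
    .groupby("region", as_index=False)["revenue"]
    .sum()
)

# Matching group counts suggest the WHERE and GROUP BY logic line up
print(len(sql_result), len(pandas_result))
```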

So, to recap: install a recent Python, spin up a virtual env, install pandas, sqlalchemy, and Jupyter, verify everything works, and keep a requirements.txt handy. With that foundation, translating SQL to pandas becomes a smooth, repeatable process rather than a frantic scramble.

Step 2: Connect to Your Database and Retrieve Data

Alright, you’ve got your virtual env humming and pandas installed—now it’s time to actually talk to the database. The moment you fire up a connection string, you’re bridging two worlds: raw SQL on the server side and a tidy DataFrame on your notebook.

First things first: decide which DB driver you need. SQLite support is built into Python; for PostgreSQL you’ll probably pip install psycopg2-binary, and for MySQL you’ll want pymysql or mysql-connector-python. The driver choice determines the URL format you pass to create_engine().

Crafting the engine

SQLAlchemy’s create_engine is your gateway. A minimal example looks like this:

from sqlalchemy import create_engine

engine = create_engine('postgresql+psycopg2://user:password@localhost:5432/mydb')

For other databases, change the URL: mysql+pymysql://user:password@localhost:3306/mydb for MySQL, or sqlite:///example.db for a local SQLite file. Once the engine object lives in memory, pandas can pull data with a single call.

Running the query

Remember that “translate sql query to pandas dataframe code” mantra? The simplest translation is just wrapping your SQL in pd.read_sql():

import pandas as pd

df = pd.read_sql('SELECT * FROM sales WHERE date >= %s', engine, params=('2023-10-01',))

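Notice the params argument? It lets you avoid string concatenation and keeps your code safe from SQL injection. The placeholder syntax follows your DB driver: %s for psycopg2 and pymysql, ? for Python’s sqlite3. You could paste the date straight into the query string and it would still run, but passing params is the best practice.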
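To see the placeholder rule in action without a database server, this sketch swaps the SQLAlchemy engine for a plain sqlite3 connection (which pandas also accepts). Python’s built-in sqlite3 driver uses the ? placeholder rather than %s; the table and its values are made up.

```python
import sqlite3
import pandas as pd

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (id INTEGER, date TEXT, revenue REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [(1, "2023-09-15", 120.0), (2, "2023-10-05", 80.0), (3, "2023-11-20", 45.5)],
)

# sqlite3 uses the qmark style: '?' where psycopg2 would use '%s'
cutoff = "2023-10-01"
df = pd.read_sql(
    "SELECT * FROM sales WHERE date >= ? ORDER BY id", conn, params=(cutoff,)
)
print(df)
conn.close()
```

The cutoff value never gets spliced into the SQL text; the driver binds it separately, which is what protects you from injection.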

Behind the scenes, read_sql asks the engine for a connection, runs the statement through the DB‑API driver, fetches all rows, and hands them to pandas together with the column names, which it uses to build the DataFrame. The result object in between is a ResultProxy in SQLAlchemy 1.x (CursorResult in 2.x). This flow is described in a Stack Overflow discussion that walks through the exact steps.

Dealing with ResultProxy quirks

If you ever peek at the raw result, you’ll see that result object. You could convert it manually with pd.DataFrame(res.fetchall(), columns=res.keys()), passing the column names explicitly so they come along, but read_sql does all of that for you in one line. The shortcut saves you a handful of lines and keeps the code readable.
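To see what read_sql saves you, here is the manual route next to the one-liner. It’s a sketch that uses a plain sqlite3 cursor as an assumed stand-in for the SQLAlchemy result object; the key detail is that the column names come from the cursor’s description metadata, not from the rows themselves.

```python
import sqlite3
import pandas as pd

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, revenue REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?)", [("North", 10.0), ("South", 20.0)])

query = "SELECT region, revenue FROM sales ORDER BY region"

# Manual route: fetch the rows, then take column names from the cursor metadata
cur = conn.execute(query)
rows = cur.fetchall()
columns = [col[0] for col in cur.description]
manual = pd.DataFrame(rows, columns=columns)

# One-line route: read_sql does the fetching and naming for you
auto = pd.read_sql(query, conn)
conn.close()

print(manual.to_dict("list") == auto.to_dict("list"))
```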

Sometimes you need column type hints—pandas will infer dtypes, but you can coerce them after the fact:

df['date'] = pd.to_datetime(df['date'])

df['revenue'] = df['revenue'].astype('float')

These conversions are cheap compared to the round‑trip to the database, and they give you full control over the resulting DataFrame.

Testing the connection

Before you dive into a massive query, run a sanity check. A tiny “SELECT 1” proves the engine works and that pandas can ingest the result:

test = pd.read_sql('SELECT 1 AS sanity', engine)

print(test)

If you see a one‑row DataFrame with the column “sanity”, you’re golden. If not, double‑check your driver installation and the connection string format.

Tip: Use SwapCode’s Code Generator

When you have a complex SELECT with joins, window functions, or CTEs, typing the exact read_sql call can be tedious. The SwapCode Code Generator can spin out a ready‑to‑paste snippet that includes the engine setup, parameter placeholders, and even basic dtype conversions. Paste your raw SQL, hit generate, and drop the output straight into your notebook.

Checklist before you move on

- Installed the correct DB driver (psycopg2, pymysql, etc.).

- Built a SQLAlchemy engine with the right URL.

- Ran a simple SELECT 1 test via pd.read_sql.

- Confirmed the DataFrame appears with expected columns.

- Handled any datatype quirks (dates, decimals).

Performance tip: don’t pull entire tables if you only need a slice. Use WHERE clauses, LIMIT, or even server‑side aggregates to shrink the result set before it hits pandas. If you’re dealing with millions of rows, consider fetching in chunks with the chunksize argument of read_sql. This returns an iterator of DataFrames you can process batch‑wise, keeping memory usage low.
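A minimal sketch of chunked reading, assuming an in-memory sqlite3 table with hypothetical region and revenue columns. The point is to shrink each chunk before keeping it; concatenating raw chunks would rebuild the full table in memory.

```python
import sqlite3
import pandas as pd

conn = sqlite3.connect(":memory:")
pd.DataFrame({
    "region": ["North", "South"] * 5,
    "revenue": [float(i) for i in range(10)],
}).to_sql("sales", conn, index=False)

# chunksize turns read_sql into an iterator of DataFrames (here 4 rows each)
partials = []
for chunk in pd.read_sql("SELECT region, revenue FROM sales", conn, chunksize=4):
    # Reduce each chunk right away so only small partial results stay in memory
    partials.append(chunk.groupby("region")["revenue"].sum())
conn.close()

# SUM can be re-aggregated: add up the per-chunk sums for each region
totals = pd.concat(partials).groupby(level=0).sum()
print(totals)
```

This works because a sum of partial sums is still the sum. An average would need the per-chunk sums and counts carried separately and divided at the end.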

Once every box is ticked, you’re set to feed real‑world queries into the translator and watch pandas do the heavy lifting. The next step will show you how to take that DataFrame and start shaping it with .loc, .groupby, and other pandas tricks.

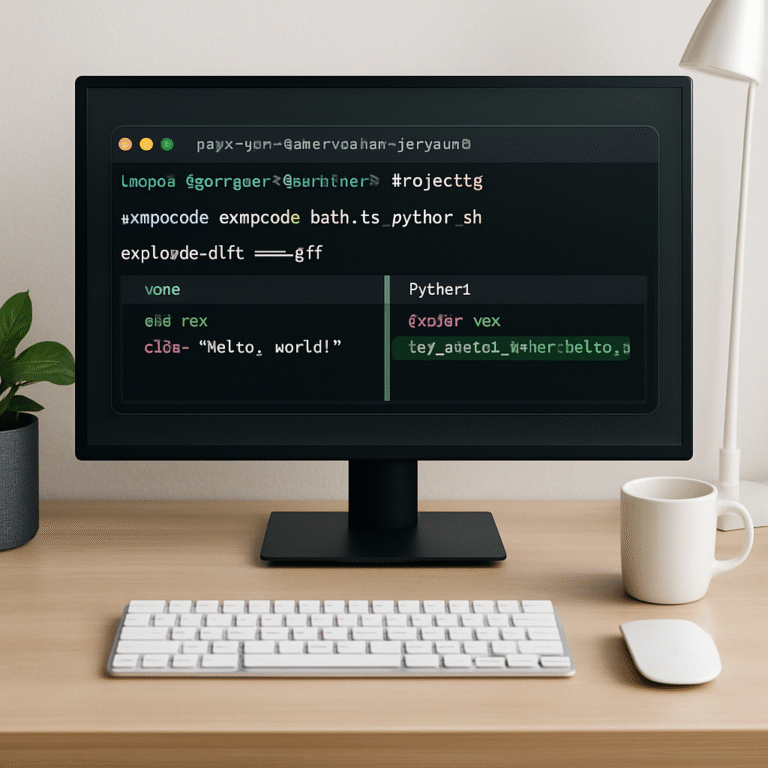

Step 3: Map SQL SELECT Clauses to Pandas Operations

Alright, you’ve pulled the raw rows into a DataFrame – now the real fun begins. This is where you start mimicking the SELECT list, WHERE filters, GROUP BY aggregates, and even joins, all with pandas methods.

Pick the columns you need

In SQL you’d write SELECT col1, col2 FROM tbl. In pandas you simply slice the DataFrame with a list of column names:

df_sel = df[["col1", "col2"]]

The official pandas “Comparison with SQL” page uses this same list‑style selection to mirror an explicit SQL column list; SELECT * is simply the whole DataFrame.

Filter rows like a WHERE clause

SQL’s WHERE becomes a boolean mask. Want rows where status = 'active' and score > 10? You chain conditions with & (AND) or | (OR):

mask = (df["status"] == "active") & (df["score"] > 10)

filtered = df[mask]

If you need an IN test, pandas’ isin is the go‑to. It’s the standard way to replicate SQL’s IN (…), as a Stack Overflow answer points out:

active_codes = ["A", "B", "C"]

filtered = df[df["code"].isin(active_codes)]

Negate it with ~ for a NOT IN equivalent.
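Here is a small sketch of both filters on made-up data. It also shows one difference worth knowing: SQL’s NOT IN never returns rows whose column is NULL, while ~isin() keeps them.

```python
import pandas as pd

df = pd.DataFrame({"id": [1, 2, 3, 4], "code": ["A", "D", None, "B"]})
active_codes = ["A", "B", "C"]

# IN (...)  ->  isin
in_rows = df[df["code"].isin(active_codes)]

# NOT IN (...)  ->  negate the mask with ~
not_in_rows = df[~df["code"].isin(active_codes)]

# SQL drops NULL codes from a NOT IN filter; pandas keeps them,
# so add notna() when you need the SQL semantics exactly
not_in_sql_style = df[~df["code"].isin(active_codes) & df["code"].notna()]

print(in_rows["id"].tolist(), not_in_rows["id"].tolist(), not_in_sql_style["id"].tolist())
```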

Group and aggregate like GROUP BY

SQL’s GROUP BY region, product followed by SUM(sales) translates to pandas’ groupby plus agg or sum:

summary = (

df

.groupby(["region", "product"])["sales"]

.sum()

.reset_index()

)

The docs point out that groupby().agg() can apply multiple functions at once, just like you’d write multiple aggregate columns in SQL.
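As a sketch, here is a GROUP BY with several aggregate columns translated via named aggregation. The data is made up; the SQL it mirrors is in the comment.

```python
import pandas as pd

df = pd.DataFrame({
    "region": ["North", "North", "South", "South", "South"],
    "sales": [10.0, 20.0, 5.0, 15.0, 40.0],
})

# SQL: SELECT region, SUM(sales) AS total_sales, AVG(sales) AS avg_sales,
#             COUNT(sales) AS n_rows
#      FROM df GROUP BY region
summary = (
    df.groupby("region")
    .agg(
        total_sales=("sales", "sum"),
        avg_sales=("sales", "mean"),
        n_rows=("sales", "count"),  # like COUNT(col): skips nulls
    )
    .reset_index()
)
print(summary)
```

Each keyword argument becomes one output column, so the result reads like the SELECT list of the SQL query.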

Join tables the pandas way

When you’d normally write FROM a JOIN b ON a.id = b.id, pandas offers merge. It’s flexible – you can specify how='inner', 'left', 'right', or 'outer' to match the SQL join type.

merged = a_df.merge(b_df, on="id", how="inner")

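One caution: merge without on= joins on every column the two frames happen to share (it’s DataFrame.join that uses the index by default), so naming the key column explicitly keeps the join predictable. Also, unlike SQL, merge matches null keys against each other, a pitfall the pandas documentation calls out.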
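A small sketch of the null-key difference, using made-up frames: pandas pairs NaN keys with each other, while a SQL equality join never matches NULL to NULL. Dropping null keys before merging restores the SQL behaviour.

```python
import numpy as np
import pandas as pd

a_df = pd.DataFrame({"id": [1.0, np.nan], "a_val": ["x", "y"]})
b_df = pd.DataFrame({"id": [np.nan, 1.0], "b_val": ["p", "q"]})

# pandas pairs the two NaN keys with each other
merged = a_df.merge(b_df, on="id", how="inner")

# SQL's ON a.id = b.id never matches NULL to NULL, so drop null keys first
sql_like = a_df.dropna(subset=["id"]).merge(
    b_df.dropna(subset=["id"]), on="id", how="inner"
)
print(len(merged), len(sql_like))
```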

Putting it all together

Let’s walk through a mini end‑to‑end example. Suppose you have a sales table and you want the total revenue per active customer for the last month.

# 1. Pull raw data (we already did this in Step 2)

# df = pd.read_sql(sql, engine)

# 2. Select only the columns we care about

sales = df[["customer_id", "date", "revenue", "status"]]

# 3. Filter to active customers and last month

mask = (sales["status"] == "active") & (sales["date"] >= "2023-10-01")

sales_month = sales[mask]

# 4. Group by customer and sum revenue

result = (

sales_month

.groupby("customer_id")['revenue']

.sum()

.reset_index(name='total_revenue')

)

That snippet is exactly what you’d type if you were hand‑translating the SQL SELECT customer_id, SUM(revenue) FROM sales WHERE status='active' AND date>=… GROUP BY customer_id. The pattern repeats for any complexity you throw at it.

If you ever feel stuck, the Free AI Code Generator can spin out the boilerplate for you – just paste the SQL and let the tool suggest the pandas skeleton.

Quick reference table

| SQL clause | Pandas equivalent | Key tip |
|---|---|---|
| SELECT col1, col2 | df[["col1", "col2"]] | Use a list; columns come back in the order you list them. |
| WHERE condition | df[mask], where mask combines conditions with & or \|, or uses .isin() | Wrap each condition in parentheses. |
| GROUP BY col with an aggregate | df.groupby("col").agg(func) | Call .reset_index() if you need a flat DataFrame. |
| JOIN … ON … | df1.merge(df2, on="key", how="inner") | Set how to inner, left, right, or outer to match the SQL join type. |

Now you’ve got a solid map from SQL SELECT syntax to pandas operations. The next step digs deeper into joins and aggregations, including pipelines that span more than two tables.

Step 4: Handle Joins and Aggregations in Pandas

Okay, we’ve got a clean DataFrame from the SELECT and WHERE steps. Now the real magic happens when you need to stitch tables together and roll‑up numbers – that’s where joins and aggregations live.

Ever felt that pang of panic when a SQL JOIN suddenly turns into a maze of merge calls? You’re not alone. The trick is to treat each join as a tiny conversation between two data frames, then let pandas do the heavy lifting.

1️⃣ Start with the right kind of join

First, ask yourself what you need: every row from the left table, only matching rows, or a full outer view? That decision maps directly to the how argument in merge.

```python
orders = pd.read_sql('SELECT * FROM orders', engine)
customers = pd.read_sql('SELECT * FROM customers', engine)

# Inner join: only orders that have a matching customer
order_cust = orders.merge(customers, on='customer_id', how='inner')
```

Swap the how to 'left' if you want every order, even those without a customer record, or 'outer' for a full picture. The most common mistake is forgetting to specify the key column when the column names differ – just use left_on and right_on.
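Here is a small sketch of a left join where the key names differ (cust_id and id are hypothetical names on made-up frames). The indicator flag is a handy extra: it labels each row so you can spot orders that found no customer.

```python
import pandas as pd

orders = pd.DataFrame({"order_id": [1, 2, 3], "cust_id": [10, 11, 99]})
customers = pd.DataFrame({"id": [10, 11], "name": ["Ana", "Ben"]})

# Key columns have different names: use left_on / right_on
order_cust = orders.merge(
    customers,
    left_on="cust_id",
    right_on="id",
    how="left",
    indicator=True,  # adds a _merge column: 'both' or 'left_only'
)

# Orders with no matching customer (the rows a SQL LEFT JOIN fills with NULLs)
orphans = order_cust[order_cust["_merge"] == "left_only"]
print(orphans[["order_id", "cust_id"]])
```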

Does that feel a bit like lining up puzzle pieces? It is. And once they click, you can move on to the aggregation stage.

2️⃣ Group, aggregate, and rename

Aggregations in pandas are essentially a groupby followed by one or more agg functions. The syntax is flexible enough to let you calculate sums, means, counts, or even custom lambdas in one pass.

```python
# Total sales per customer and product category
summary = (
    order_cust
    .groupby(['customer_id', 'category'])
    .agg(total_sales=('price', 'sum'), order_count=('order_id', 'nunique'))
    .reset_index()
)
```

Notice the named aggregation syntax – it makes the resulting columns self‑describing, which saves you a rename step later.

What if you need a weighted average, like average discount weighted by quantity? You can drop a tiny lambda right inside agg:

```python
summary = (
    order_cust
    .groupby('customer_id')
    .agg(weighted_discount=(
        'discount',
        # weights come from the matching rows of the quantity column
        lambda x: (x * order_cust.loc[x.index, 'quantity']).sum()
        / order_cust.loc[x.index, 'quantity'].sum(),
    ))
)
```

It looks a little messy, but it’s just Python doing the math you’d write in a sub‑query.
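If the lambda feels too clever, the same weighted average can be written in vectorized form: multiply first, sum both pieces per group, then divide. A sketch on a tiny made-up order_cust frame (only the columns the calculation needs):

```python
import pandas as pd

order_cust = pd.DataFrame({
    "customer_id": [1, 1, 2],
    "discount": [0.10, 0.20, 0.05],
    "quantity": [1, 3, 2],
})

# SUM(discount * quantity) / SUM(quantity), without a lambda:
# build the weighted column once, sum both pieces per group, then divide
weighted = (
    order_cust.assign(disc_x_qty=order_cust["discount"] * order_cust["quantity"])
    .groupby("customer_id")[["disc_x_qty", "quantity"]]
    .sum()
)
weighted["weighted_discount"] = weighted["disc_x_qty"] / weighted["quantity"]
print(weighted["weighted_discount"])
```

This mirrors how you would write it in SQL, with two SUMs and a division, and it avoids calling a Python function once per group.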

3️⃣ Chain joins and aggregates gracefully

In many real‑world scenarios you’ll join more than two tables before aggregating. The key is to keep each step readable. Break the chain into variables, and comment what each variable represents.

```python
# Bring product details in (products and shipping are assumed to be
# loaded with pd.read_sql, just like orders and customers)
order_prod = order_cust.merge(products, on='product_id', how='left')

# Add shipping info
full_data = order_prod.merge(shipping, on='order_id', how='left')

# Now aggregate
final_report = (
    full_data
    .groupby(['region', 'month'])
    .agg(revenue=('price', 'sum'), shipping_cost=('shipping_cost', 'sum'))
    .reset_index()
)
```

When you look back at the notebook, those variable names read like a story – “order_prod”, “full_data”, “final_report”. It’s much easier for a teammate (or future you) to follow.

Still stuck on a particularly gnarly join? The Free AI Code Debugger can spot mismatched keys, unexpected nulls, and even suggest the right how for your case.

4️⃣ Practical checklist before you run the cell

- Confirm the join key columns exist in both frames and have the same dtype.

- Check len() (or .shape) after each merge to verify row counts, and peek at .head() to eyeball the columns.

- Check for duplicated columns: pandas suffixes overlapping column names with _x and _y if you forget to drop one.

- Validate aggregation results against a known small‑sample query in your database.

That final sanity check is the safety net that stops you from shipping a report with inflated numbers.
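A sketch of the row-count check with one extra guard: merge’s validate argument fails fast when a lookup table has duplicate keys, which is a common cause of inflated totals. The frames and names are made up.

```python
import pandas as pd

orders = pd.DataFrame({"order_id": [1, 2, 3], "customer_id": [10, 10, 11]})
customers = pd.DataFrame(
    {"customer_id": [10, 11, 11], "name": ["Ana", "Ben", "Ben (dup)"]}
)

rows_before = len(orders)
raised = False
try:
    # validate raises MergeError if the right side repeats a key
    orders.merge(customers, on="customer_id", how="left", validate="many_to_one")
except pd.errors.MergeError as exc:
    raised = True
    print("Join would fan out:", exc)

# After de-duplicating the lookup table, the row count is preserved
clean = orders.merge(
    customers.drop_duplicates("customer_id"),
    on="customer_id",
    how="left",
    validate="many_to_one",
)
print(rows_before, len(clean))
```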

Now you’ve got the toolbox to turn a multi‑table SQL query into a tidy pandas pipeline. In the next step we’ll tackle subqueries, the part of SQL that maps least directly onto pandas.

Step 5: Convert Complex Subqueries to Pandas Logic

Alright, we’ve already wrangled joins and basic aggregations. Now it’s time to tackle those gnarly subqueries that make you stare at the screen and wonder if you should just give up.

Ever opened a query and seen something like WHERE id IN (SELECT user_id FROM active_users) and thought, “How the heck do I do that in pandas?” You’re not alone. The good news is that every subquery has a pandas equivalent – you just need to break it down into bite‑size steps.

Why subqueries feel scary

SQL loves nesting because the engine can optimise it for you. Pandas, on the other hand, works with explicit DataFrames, so we have to recreate the nesting manually.

Think of a subquery as a mini‑report that feeds its result into the outer query. In pandas you’d run that mini‑report first, store the result in a temporary DataFrame, then merge or filter the main one.

Turn a simple IN‑subquery into a merge

Suppose you have a sales table and you only want rows where the customer_id appears in a list of “premium” customers stored in another table.

# SQL

SELECT * FROM sales

WHERE customer_id IN (SELECT id FROM premium_customers);

In pandas you’d pull the two tables, then filter with .isin() or, if you need extra columns from the subquery, do an inner merge.

sales = pd.read_sql('SELECT * FROM sales', engine)

premium = pd.read_sql('SELECT id FROM premium_customers', engine)

# Option 1 – boolean mask

filtered = sales[sales['customer_id'].isin(premium['id'])]

# Option 2 – merge if you need extra fields

filtered = sales.merge(premium, left_on='customer_id', right_on='id', how='inner')

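Both approaches return the same sales rows as long as premium['id'] has no duplicates. The merge version also carries the extra id column, and if an id appeared twice it would repeat the matching sales rows, so de‑duplicate the key side first.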
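The duplicate caveat is easy to miss, so here is a sketch with made-up rows where one premium id appears twice in the subquery result.

```python
import pandas as pd

sales = pd.DataFrame({"sale_id": [1, 2, 3], "customer_id": [7, 8, 9]})
# The "subquery" result, with customer 7 listed twice
premium = pd.DataFrame({"id": [7, 7, 9]})

# Option 1: isin ignores duplicates, just like SQL's IN
via_isin = sales[sales["customer_id"].isin(premium["id"])]

# Option 2: an inner merge repeats sale 1 once per duplicate id...
via_merge_raw = sales.merge(premium, left_on="customer_id", right_on="id", how="inner")

# ...so de-duplicate the key side and drop the extra column to match
via_merge = sales.merge(
    premium.drop_duplicates(), left_on="customer_id", right_on="id", how="inner"
).drop(columns="id")

print(len(via_isin), len(via_merge_raw), len(via_merge))
```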

Handling correlated subqueries with .apply

Correlated subqueries reference a column from the outer query, like “for each order, give me the max price of items in the same category”.

# SQL

SELECT o.id, o.category,

(SELECT MAX(price) FROM items i WHERE i.category = o.category) AS max_cat_price

FROM orders o;

In pandas you can group the items table once, then merge (or map) the result back, or you can use .apply if the logic is truly row‑by‑row.

items = pd.read_sql('SELECT * FROM items', engine)

# Pre‑aggregate max price per category

max_price = items.groupby('category')['price'].max().reset_index().rename(columns={'price':'max_cat_price'})

orders = pd.read_sql('SELECT id, category FROM orders', engine)

# Merge the aggregated values

orders = orders.merge(max_price, on='category', how='left')

If the subquery can’t be expressed as a simple aggregation, you can fall back to .apply:

def max_price_in_cat(row):
    # Same filter the correlated subquery runs for each outer row
    return items.loc[items['category'] == row['category'], 'price'].max()

orders['max_cat_price'] = orders.apply(max_price_in_cat, axis=1)

It’s slower, but it mimics the correlated nature of the original SQL.
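To check that the fast path and the row-by-row path agree, here is a sketch on made-up items and orders frames that uses groupby plus Series.map, the “map the result back” option.

```python
import pandas as pd

items = pd.DataFrame({"category": ["toys", "toys", "books"], "price": [5.0, 12.0, 20.0]})
orders = pd.DataFrame({"id": [1, 2, 3], "category": ["toys", "books", "garden"]})

# Row-by-row version (mirrors the correlated subquery, but slow)
def max_price_in_cat(row):
    return items.loc[items["category"] == row["category"], "price"].max()

slow = orders.apply(max_price_in_cat, axis=1)

# Vectorized version: aggregate once, then map each order's category to its max
max_by_cat = items.groupby("category")["price"].max()
fast = orders["category"].map(max_by_cat)

# Both give NaN for a category with no items (SQL would return NULL)
pd.testing.assert_series_equal(slow, fast, check_names=False)
print(fast.tolist())
```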

Aggregating with groupby to replace scalar subqueries

Scalar subqueries return a single value that you can use in a SELECT list or a WHERE clause. They’re often used for ratios or percentages.

# SQL

SELECT c.id,

c.sales,

(SELECT SUM(sales) FROM customers) AS total_sales,

c.sales / (SELECT SUM(sales) FROM customers) AS share

FROM customers c;

In pandas you’d compute the total once, then broadcast it.

customers = pd.read_sql('SELECT id, sales FROM customers', engine)

total_sales = customers['sales'].sum()

customers['total_sales'] = total_sales

customers['share'] = customers['sales'] / total_sales

Notice how the subquery disappears – we just reuse a Python variable.

Window‑function‑style subqueries with groupby + transform

When you see OVER (PARTITION BY …) in SQL, think groupby().transform() in pandas.

# SQL

SELECT id, region, sales,

SUM(sales) OVER (PARTITION BY region) AS regional_total

FROM sales_table;

Equivalent pandas:

sales = pd.read_sql('SELECT id, region, sales FROM sales_table', engine)

sales['regional_total'] = sales.groupby('region')['sales'].transform('sum')

This keeps the original row count intact, just like a window function.
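To confirm the translation, this sketch runs the same window query in an in-memory SQLite database and compares it with the pandas transform. It assumes Python’s bundled SQLite is 3.25 or newer (the first version with window functions), and the rows are made up.

```python
import sqlite3
import pandas as pd

sales = pd.DataFrame({
    "id": [1, 2, 3, 4],
    "region": ["North", "South", "North", "South"],
    "sales": [10.0, 5.0, 30.0, 15.0],
})

# pandas: the per-region sum is broadcast back to every row
sales["regional_total"] = sales.groupby("region")["sales"].transform("sum")

# The same query executed by SQLite
conn = sqlite3.connect(":memory:")
sales[["id", "region", "sales"]].to_sql("sales_table", conn, index=False)
sql = pd.read_sql(
    "SELECT id, region, sales, "
    "SUM(sales) OVER (PARTITION BY region) AS regional_total "
    "FROM sales_table ORDER BY id",
    conn,
)
conn.close()

print(len(sql) == len(sales))  # no rows collapsed, unlike GROUP BY
print(sql["regional_total"].tolist() == sales["regional_total"].tolist())
```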

Putting it together – a mini end‑to‑end example

Imagine you have three tables: orders, order_items, and products. You need the average order value for customers who bought a “premium” product in the last month.

# 1. Pull raw data (single quotes mark the date as a SQL string literal)

orders = pd.read_sql("SELECT * FROM orders WHERE order_date >= '2023-10-01'", engine)

items = pd.read_sql('SELECT * FROM order_items', engine)

products = pd.read_sql('SELECT id, tier FROM products', engine)

# 2. IDs of premium products

premium_ids = products.loc[products['tier'] == 'premium', 'id']

# 3. Attach customer_id (assumed to be a column of orders) to each recent order line

recent_items = items.merge(orders[['order_id', 'customer_id']], on='order_id', how='inner')

# 4. The "subquery": customers with at least one premium line

premium_customers = recent_items.loc[recent_items['product_id'].isin(premium_ids), 'customer_id'].unique()

# 5. Full value of every recent order placed by those customers (all lines, not just premium ones)

qualifying = recent_items[recent_items['customer_id'].isin(premium_customers)]

order_totals = qualifying.groupby('order_id')['price'].sum()

# 6. Average across all qualifying orders

avg_value = order_totals.mean()

print(f"Average order value for premium buyers: {avg_value:.2f}")

If you get stuck at any step, SwapCode’s Code Explainer can walk you through the pandas equivalent of each subquery, showing exactly which columns to merge and where to apply groupby or transform.

Quick sanity‑check checklist

- Run the subquery DataFrame first and inspect .head(); you’ll spot mismatched column names early.

- Make sure join keys share the same dtype (int vs. string) before you merge.

- If you used .apply, compare a few row results against the original SQL output.

- For scalar subqueries, verify the broadcast variable matches the SQL total with a quick df['col'].sum() check.

- When using transform, double‑check that the result length equals the original DataFrame length.

Once you’ve crossed those T’s and dotted those I’s, you’ve turned nested SQL logic into clean, testable pandas code. The final step makes that code faster and checks that its numbers match the source query.

Step 6: Optimize Performance and Validate Results

We finally have a working DataFrame that mirrors our original SQL query, but a raw translation can be a bit sluggish if we don’t give it a little TLC. This step is all about squeezing speed out of pandas and making sure the numbers we’re looking at are rock‑solid.

Why performance matters

Ever run a notebook and watch the kernel grind for minutes on a million‑row join? That feeling is the difference between a smooth workflow and a wasted afternoon. When you translate SQL to pandas, you’re swapping a server‑side optimizer for Python code that runs in your own memory space. That shift means you have to be intentional about memory usage and vectorized operations.

Chunk your reads

Instead of pulling an entire table with pd.read_sql(), use the chunksize argument. It returns an iterator of smaller DataFrames you can process one piece at a time.

chunks = pd.read_sql('SELECT * FROM big_table', engine, chunksize=100_000)

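df = pd.concat([process(chunk) for chunk in chunks])  # process() stands in for your own per-chunk filter or aggregation

This pattern keeps your RAM happy, as long as process() shrinks each chunk, and gives you a natural place to filter rows before the pieces are combined.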

Leverage dtypes early

Strings and objects chew memory like nobody’s business. If a column is really a category (think “region” or “status”), cast it right after you load the chunk:

chunk['region'] = chunk['region'].astype('category')

Categories store an integer code under the hood, shaving off both memory and CPU time for groupbys.

Prefer vectorized ops over .apply()

.apply() feels convenient, but it often falls back to Python loops. Whenever you can, rewrite the logic with built‑in pandas methods, .eval(), or .query(). For example:

# instead of:

df['discounted'] = df.apply(lambda r: r['price'] * (1 - r['discount']), axis=1)

# try:

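df.eval('discounted = price * (1 - discount)', inplace=True)

Plain column arithmetic, df['discounted'] = df['price'] * (1 - df['discount']), works just as well. On a 500k‑row frame, either change can shave seconds off the run.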
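A quick sketch on made-up columns, confirming that the three forms produce the same numbers; time them with %timeit on your own data to see the gap.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"price": [100.0, 50.0, 20.0], "discount": [0.1, 0.0, 0.25]})

# Row-wise apply: one Python function call per row
via_apply = df.apply(lambda r: r["price"] * (1 - r["discount"]), axis=1)

# Vectorized: whole-column arithmetic, no Python-level loop
via_columns = df["price"] * (1 - df["discount"])

# eval: the same expression as a string, returning a frame with the new column
df = df.eval("discounted = price * (1 - discount)")

print(np.allclose(via_apply, via_columns), np.allclose(df["discounted"], via_columns))
```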

Profile with %timeit and memory_usage()

Before you commit to a pipeline, drop a quick %timeit in a notebook cell and see how long each step takes. Pair it with df.memory_usage(deep=True).sum() to watch memory drift. If something spikes, you’ve found a hotspot worth refactoring.
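Here is a sketch that puts memory_usage(deep=True) to work on the category tip; the column is synthetic, and the exact byte counts will vary by pandas version.

```python
import pandas as pd

# About 100,000 rows but only three distinct region labels
df = pd.DataFrame({"region": ["North", "South", "West"] * 33_334})

before = df.memory_usage(deep=True).sum()
df["region"] = df["region"].astype("category")
after = df.memory_usage(deep=True).sum()

print(f"{before:,} bytes -> {after:,} bytes")
```

The fewer distinct values a column has relative to its length, the bigger the saving; a column of mostly unique strings gains little from category.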

Validate the results

Performance is great, but not if the numbers are off. The easiest sanity check is to compare a handful of aggregates against the original SQL output. Run the same SUM(), COUNT(), or AVG() in your database and then do:

assert round(df['revenue'].sum(), 2) == round(sql_total, 2)

If the totals differ, this bare assert only tells you that they differ. For a row‑by‑row comparison, pandas.testing.assert_frame_equal reports exactly which column and values mismatched.
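A sketch of both checks on hypothetical frames standing in for the SQL output and the pandas translation. Sorting and resetting the index first matters, because neither side guarantees row order.

```python
import math
import pandas as pd

# Hypothetical: the same aggregate from SQL and from the pandas translation
sql_df = pd.DataFrame({"region": ["North", "South"], "revenue": [300.0, 250.1]})
pandas_df = pd.DataFrame({"region": ["South", "North"], "revenue": [250.1, 300.0]})

# Totals: compare floats with a tolerance instead of ==
assert math.isclose(pandas_df["revenue"].sum(), sql_df["revenue"].sum(), rel_tol=1e-9)

# Full frame: sort and reset the index first, because row order is not guaranteed
pd.testing.assert_frame_equal(
    pandas_df.sort_values("region").reset_index(drop=True),
    sql_df.sort_values("region").reset_index(drop=True),
    check_exact=False,  # tolerate tiny float differences
)
matched = True
print("frames match")
```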

Spot‑check with samples

Pick 5‑10 random order IDs, pull the same rows directly from the database, and eyeball them side‑by‑side. It’s a quick way to catch subtle type problems, like dates that arrived as strings or values that shifted during a pd.to_datetime() conversion.

Automate the sanity‑check

You can wrap the validation steps into a tiny function you call after every major transformation:

import math

def validate(df, sql_df, key='order_id'):
    # ensure same row count
    assert len(df) == len(sql_df), 'Row count mismatch'
    # ensure key uniqueness
    assert df[key].is_unique, 'Duplicate keys detected'
    # compare aggregates with a tolerance, since float sums rarely match bit for bit
    for col in ['price', 'quantity']:
        assert math.isclose(df[col].sum(), sql_df[col].sum(), rel_tol=1e-9), f'{col} total differs'

Running this after each merge or groupby gives you early warnings instead of a nasty surprise at the end.

When to call in the AI helper

If you’re stuck tweaking a join for speed or unsure which columns to index, SwapCode’s Code Explainer can suggest performance‑friendly alternatives and point out hidden bottlenecks in your pandas pipeline.

Quick performance & validation checklist

- Read large tables in chunks and filter early.

- Convert string columns that act like enums to category dtype.

- Replace .apply() with vectorized expressions or .eval().

- Measure runtime with %timeit and memory with memory_usage().

- Cross‑check totals, counts, and averages against the source SQL.

- Use assert_frame_equal for full‑frame sanity.

- Run a random sample comparison for date and type fidelity.

- Wrap validation logic in a reusable helper function.

If you’re iterating over the same source many times, cache the intermediate DataFrames to disk with df.to_parquet() (which needs pyarrow or fastparquet installed) or df.to_pickle(). Reloading a local Parquet file is usually far faster than re‑running the query and the whole SQL‑to‑pandas conversion, and it preserves most dtypes.

Following these steps means your translated pandas code not only runs faster, but also gives you the confidence that every figure matches the original query. That’s the sweet spot where productivity meets reliability.

Conclusion

We’ve walked through every step of translating a SQL query into pandas DataFrame code, from setting up your environment to handling tricky subqueries.

Now you probably feel that familiar mix of relief and “what’s next?”, and that’s exactly what we want. You’ve seen how a single pd.read_sql call brings a SELECT result straight into a DataFrame, and how merges, groupbys, and transforms let you recreate joins and window functions without breaking a sweat.

Key takeaways

- Start with a clean virtual environment and a working engine.

- Use boolean masks and .isin() for WHERE‑style filters.

- Leverage merge and named aggregations to mirror JOIN and GROUP BY.

- Break complex subqueries into temporary DataFrames, then stitch them back.

- Profile your pipeline, read big tables in chunks, write results back in bulk with to_sql(method='multi'), and always run a quick sanity check.

Does any of this feel like a lot to remember? Keep the checklist handy, and treat each piece as a reusable snippet you can drop into any notebook.

Finally, the real power comes when you let tools like SwapCode’s Code Explainer generate the boilerplate for you—so you spend more time analyzing results and less time typing.

Give it a try on your next report, and you’ll see how quickly the translation becomes second nature. Remember, every time you replace a manual copy‑paste with a few lines of pandas, you’re shaving minutes off a workflow that could scale to hours. Happy coding!

FAQ

How do I start translating a simple SELECT statement into pandas code?

First, pull the raw result with pd.read_sql. Wrap your SQL string in the call, pass the SQLAlchemy engine, and you get a DataFrame that mirrors the SELECT output. From there you can slice the columns you need with df[['col1','col2']]. This one‑line bridge replaces hand‑crafted loops and lets you see the data instantly, so you know you’re on the right track before you start reshaping.

What’s the best way to replicate a WHERE clause in pandas?

Think of a WHERE clause as a boolean mask. Build the mask with pandas comparison operators, combine multiple conditions with & or |, and then index the DataFrame: filtered = df[(df['status']=='active') & (df['score']>10)]. If you need an IN test, df['code'].isin(['A','B','C']) does the trick. This approach feels natural, and you can chain additional filters without rewriting the whole query.

How can I perform a JOIN between two tables using pandas?

Use merge. Align the key columns, choose the join type with the how argument (inner, left, right, outer), and you get a DataFrame that looks just like the SQL result. For example, orders.merge(customers, on='customer_id', how='inner') mimics FROM orders JOIN customers ON orders.customer_id = customers.customer_id. If the key is named differently in each table, use left_on and right_on instead. Keep the intermediate DataFrames named clearly, and the pipeline reads like a story.

What’s the pandas equivalent of GROUP BY with multiple aggregates?

Combine groupby with named aggregation: summary = df.groupby(['region','product']).agg(total_sales=('revenue','sum'), avg_price=('price','mean')).reset_index(). The named aggregation syntax creates self‑describing columns, so you don’t need a separate rename step. You can stack as many aggregate functions as you like, and pandas will return a tidy DataFrame ready for further analysis or export.

How do I translate a window function like ROW_NUMBER() into pandas?

Window functions become groupby + cumcount or transform. To get the latest order per customer, sort by date descending, then df['rank'] = df.sort_values('order_date', ascending=False).groupby('customer_id').cumcount() + 1. After that, filter df[df['rank']==1]. It feels a bit more code‑y than the SQL one‑liner, but the logic is transparent and easy to debug.
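A runnable sketch of that recipe on made-up orders, with the SQL it mirrors as a comment.

```python
import pandas as pd

df = pd.DataFrame({
    "customer_id": [1, 1, 2, 2, 2],
    "order_id": [101, 102, 201, 202, 203],
    "order_date": pd.to_datetime(
        ["2023-10-01", "2023-10-05", "2023-09-30", "2023-10-02", "2023-10-03"]
    ),
})

# ROW_NUMBER() OVER (PARTITION BY customer_id ORDER BY order_date DESC)
df["rank"] = (
    df.sort_values("order_date", ascending=False)
    .groupby("customer_id")
    .cumcount()
    + 1
)  # assignment aligns on the index, so the original row order is kept

latest = df[df["rank"] == 1]
print(latest[["customer_id", "order_id"]])
```

If two orders can share a date, sort with kind="mergesort" (a stable sort) or add a tie-breaker column so the numbering is deterministic, much as you would add a second ORDER BY key in SQL.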

What should I watch out for when converting complex subqueries?

Break the subquery into its own DataFrame first – think of it as a temporary table. Run the inner SELECT with pd.read_sql, inspect the shape, then join or aggregate it just like any other frame. This step‑by‑step materialisation lets you verify each piece, catch datatype mismatches early, and avoid the “black‑box” feeling that sometimes creeps in with a single massive query.

How can I speed up the translation process for large result sets?

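Fetch data in chunks with for chunk in pd.read_sql(sql, engine, chunksize=50_000): …, and reduce each chunk (filter or aggregate it) before concatenating; concatenating raw chunks rebuilds the full result in memory. When you later write back with to_sql, method='multi' batches rows into multi‑row INSERT statements, and on SQL Server with the mssql+pyodbc dialect you can also pass fast_executemany=True to create_engine. Also keep column dtypes tight: float32 instead of the default float64 halves the memory per value, if the lost precision is acceptable. A quick sanity check after each major step keeps you from chasing silent data loss.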